Atari Punk Paddle

For many years now I’ve been having my students build an Atari Punk Console (stepped-tone generator) as one of their first assignments in my Interaction Design course. This is a great project for many reasons:

- It’s easy for a beginner to build and debug in a single course meeting

- You need to learn about all the major electronic components and principles

- It makes a satisfying sound and is fun to play (to a point)

- It connects novices with both electronics history and a contemporary culture

- You can house it so many different ways and…

- By swapping out the potentiometers for other variable resistors (sensors), you can make it react to all sorts of user and environmental actions, and that’s the basis of interactivity

So clearly, I’m a big fan. However, for the longest time I’ve been using a breadboard version I built many years ago to demo the project. This works because students see how the connections are done in a breadboard, which is helpful if you’ve never used one before. Mine also uses two 555 timers instead of one 556, so students can’t just straight-up copy it without thinking. The problem is that it’s just not that much fun to demo. So, I decided it was time to build a new one in a proper housing.

Despite all the attention this little circuit gets and all the variation built around it, I’ve only seen a few examples using the Atari 2600 Paddle Controller. One simply uses two of them as control knobs, and another uses a photoresistor as the second control. I felt like it deserved something better. The paddle controller has a built-in 1Mohm pot that you don’t even have to remove or swap (assuming it still works), so you can actually build on top of the original hardware. Plus the ergonomics of the paddle are awesome. This really feels like a musical instrument: it’s natural to hold, and the motion is far smoother than the sounds.

So without further ado, here is my new Atari Punk Paddle:

There’s a video demo at the bottom, but let me explain some of the awesome features here. First, as I mentioned, I used the original pot and most of the original case. I did cut the cord and replace it with a Y-connector that I made, with one side for 9V input and one for line-out. It’s classic mono, but could easily have been stereo.

The best part is the button. Of course the APC does not traditionally have a button. Yes, some people add one as an on/off switch or to cut the sound for a more percussive effect. I took a different approach and used the physical plastic button as the knob on a sliding 1Mohm pot. You can see it better in the second picture below:

I had to **very carefully** dremel just about 1/8th of an inch off of a 1.5in section of the paddle plastic to accommodate the total range of motion for the sliding pot, but that’s the only modification I had to make to the case (there is a ton of room in there). I also had to slice the button in half (it’s bulky) and ditch the spring mechanism and momentary switch. Getting it to stay in place was also fairly straightforward, as the slide is big and sits nicely atop a small pillow of hot glue that holds it in just the right place. The slide is also a logarithmic (audio) taper, while the other pot is linear. I chose the log pot because I wasn’t sure how comfortable it would be to slide the button all the way down the side (it’s not really that big of a deal after all), so I wanted all the audio action to be closer to where the natural button resides. This way, when you hold it, it barely feels any different from the original paddle. It’s a real blast to play. I’m very satisfied.
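As a rough illustration of the taper difference, here is a minimal Python sketch comparing a linear pot with an idealized logarithmic one. The exponential formula and the `curve` constant are assumptions chosen for illustration, not datasheet values. Real audio-taper parts only approximate a curve like this, and which end of the travel changes fastest depends on how the part is wired.

```python
R_TOTAL_OHMS = 1_000_000  # 1 Mohm, matching the pots described above


def linear_taper(position: float) -> float:
    """Resistance of an ideal linear pot; position runs from 0.0 to 1.0."""
    return R_TOTAL_OHMS * position


def log_taper(position: float, curve: float = 100.0) -> float:
    """Resistance of an idealized log (audio) pot.

    `curve` is a hypothetical shape constant picked for this sketch.
    """
    return R_TOTAL_OHMS * (curve ** position - 1) / (curve - 1)


if __name__ == "__main__":
    # Print both tapers at a few slider positions for comparison.
    for step in range(0, 11, 2):
        pos = step / 10
        print(f"{pos:.1f}  linear={linear_taper(pos):>9.0f}  log={log_taper(pos):>9.0f}")
```

In this model, half of the slider’s travel covers only about 9% of the total resistance, so most of the change is packed into one end of the travel.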

Escaping Education

I’ve done a couple of Escape Rooms over the past few months, and I always find them to be pretty engaging and revealing experiences. From the in situ problem-solving to the ad hoc collaborations to post-experience discussions, I think I always come away a little wiser about myself. Some friends of mine at the university library and I have been kicking around the idea of building our own ER, not just for fun, but as a new kind of library service. This is what we’re thinking…

These rooms always have some kind of theme: ancient treasure, the wild west, a spaceship, etc. These are great hooks for the everyday entertainment crowd (who hasn’t imagined themselves as Indiana Jones?), but what if these themes were actually aligned to course material? What if your course in medieval literature came with a medieval castle escape room experience (better keep those characters from Canterbury Tales straight if you want to leave)? What if your course in chemistry had a chem lab escape room experience (sorry, that lock uses right-handed enantiomers only!)? Would these experiences improve learning outcomes? Our hypothesis is that they would, but what would it take to create these experiences for so many different courses?

The library is a natural ally for this sort of endeavor. University libraries provide centralized services for all university courses. They regularly help faculty and students track down books and multimedia, and they offer resources like makerspaces and production studios for other courses, so maybe they could offer a customizable escape room shell that you could use to create an escape room experience that integrates into a course. What we’re imagining as an escape room framework would need a dedicated physical space (not so easy in universities these days, but bear with me) with all the usual escape room accoutrements, like video cameras and mics to record the experience and interactions. It would also need some internal infrastructure, maybe a wired or, more likely, wireless network, that lets you hook up five or six modular puzzles to create the experience. The library could house a collection of puzzles, some off the shelf, some designed by students (like those from my Interaction Design course), and instructors could choose the five or six that they want for their experience. Then the library staff would work with the instructor to adapt content from their course to suit the puzzles and create the narrative for the experience.

We’ve already started working through what this would take. The first batch of puzzles will be designed by the students in my classes this fall. They will be basic electro-mechanical devices with unique triggers and affordances, but they will also come with instructions on how to customize them quickly and easily, by printing out cards from a template to change the experience from literature to chemistry for example. Once these are built we will pilot the assembly and narrativizing pieces to see what it takes to develop an experience using these pieces. Instructors won’t adopt this approach if the barrier to entry is too high so we want to keep it as simple as possible, online forms and templates mostly. Then we move on to recruiting courses and testing to see if students who use ERs end up performing better in course-defined criteria. Stay tuned for updates…

Robot Drift

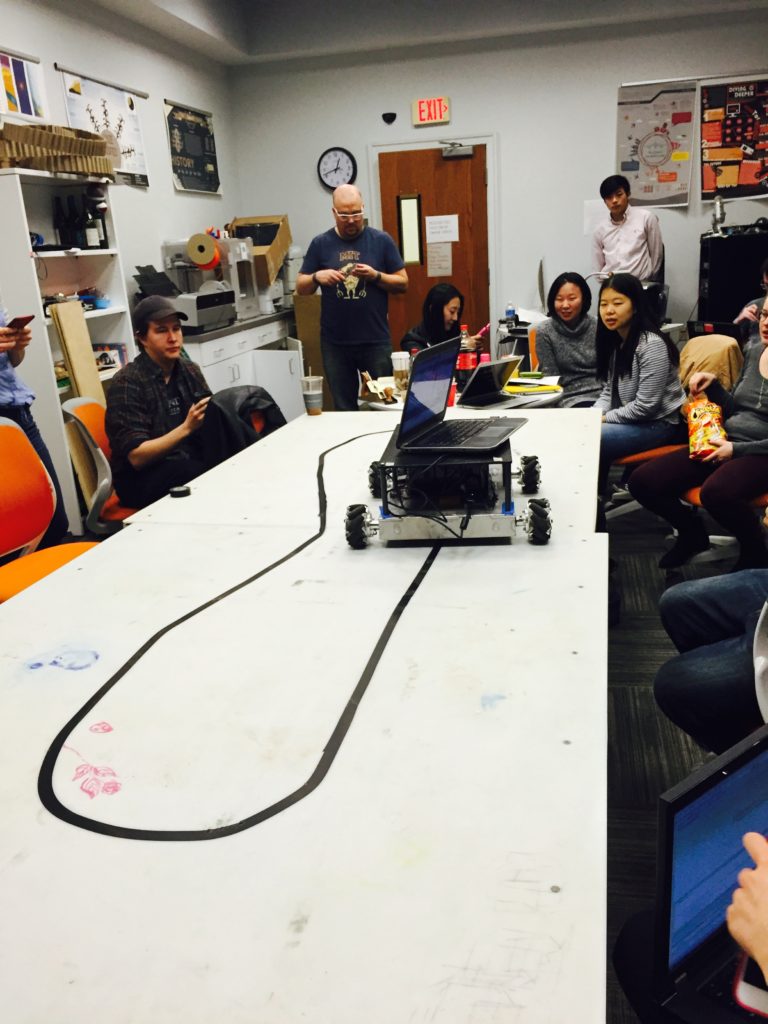

We all know that robots will be taking all of our jobs sooner or later, just before they rise up against us. They still have quite a way to go, however. In the Pilgrimage Project we took advantage of the widespread human desire to gawk at robots doing things. Danielle Storbeck, Chris Miller and I put together a robot tour guide unlike any I had ever seen.

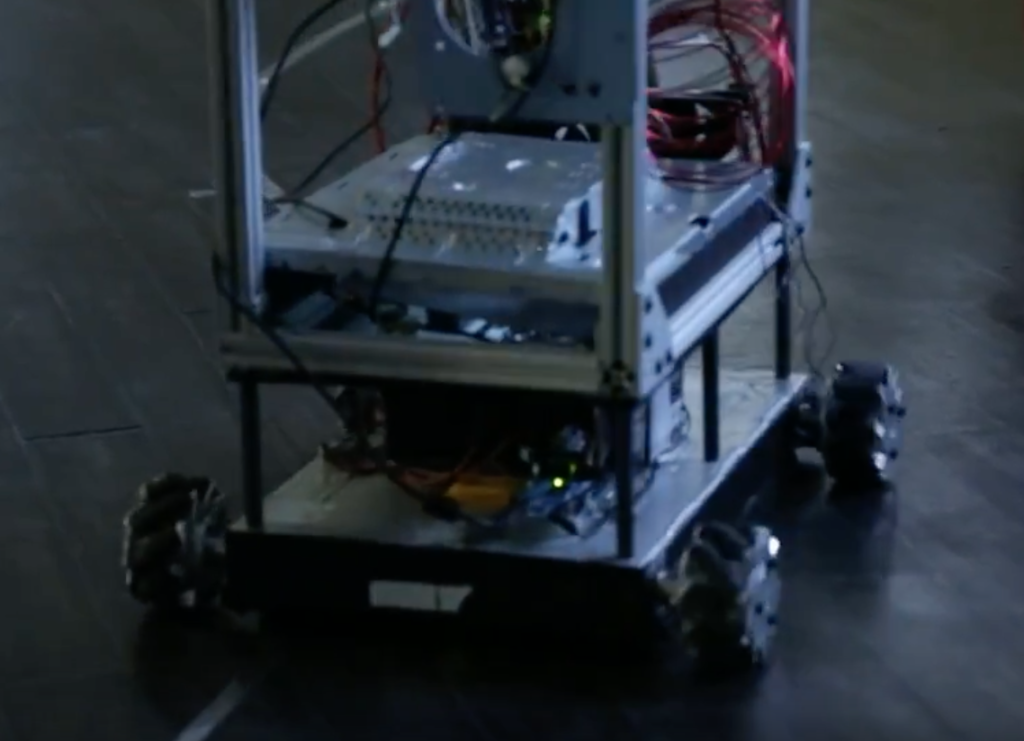

The guts of it (not pictured) were pretty basic. It’s a platform robot with an Arduino Mega for the main logic board, two controllers for the front and rear wheels, and a MacBook Air handling the voice synthesizer and timing. The robot is a basic line-follower, and at first we took it very seriously. We wanted to make the best robot tour guide anyone had ever seen. One day though, late in the project cycle, I totally messed it up by trying to attach some 12V fans to the back of it. I created a ground loop that fried one of the line sensors and the thing was never the same again. It would drift off the line and never find its way back. After freaking out, we started to talk about how funny it would be if the robot didn’t just drift off the line, but also in its narrative. What if it started getting off-topic during the tour? Giving bad information and getting confused? What if there was cognitive drift as well as physical drift?
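For readers who haven’t built a line-follower, here is a minimal Python sketch of why a single dead sensor can cause that kind of one-way drift. The robot’s actual Arduino code isn’t shown in this post, so everything here is an assumption: the two-sensor layout straddling the line, the function names `read_sensor` and `steer`, and the speed values are all made up for illustration.

```python
from __future__ import annotations


def read_sensor(over_line: bool, working: bool = True) -> bool:
    """Hypothetical sensor model: a fried sensor never reports the line."""
    return over_line and working


def steer(left_sees_line: bool, right_sees_line: bool) -> tuple[float, float]:
    """Return (left_wheel_speed, right_wheel_speed), each from 0.0 to 1.0.

    Assumes two sensors straddling the line: a sensor that sees the line
    means the line has moved toward that side, so the robot turns that way.
    """
    if left_sees_line and not right_sees_line:
        return (0.3, 1.0)  # slow the left wheel to turn left
    if right_sees_line and not left_sees_line:
        return (1.0, 0.3)  # slow the right wheel to turn right
    return (1.0, 1.0)      # neither or both: keep going straight


if __name__ == "__main__":
    # The line has slipped under the right sensor in both cases.
    healthy = steer(read_sensor(False), read_sensor(True))
    fried = steer(read_sensor(False), read_sensor(True, working=False))
    print("healthy right sensor:", healthy)
    print("fried right sensor:  ", fried)
```

With the right sensor dead, the robot keeps driving straight when the line slips to that side, so nothing ever steers it back.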

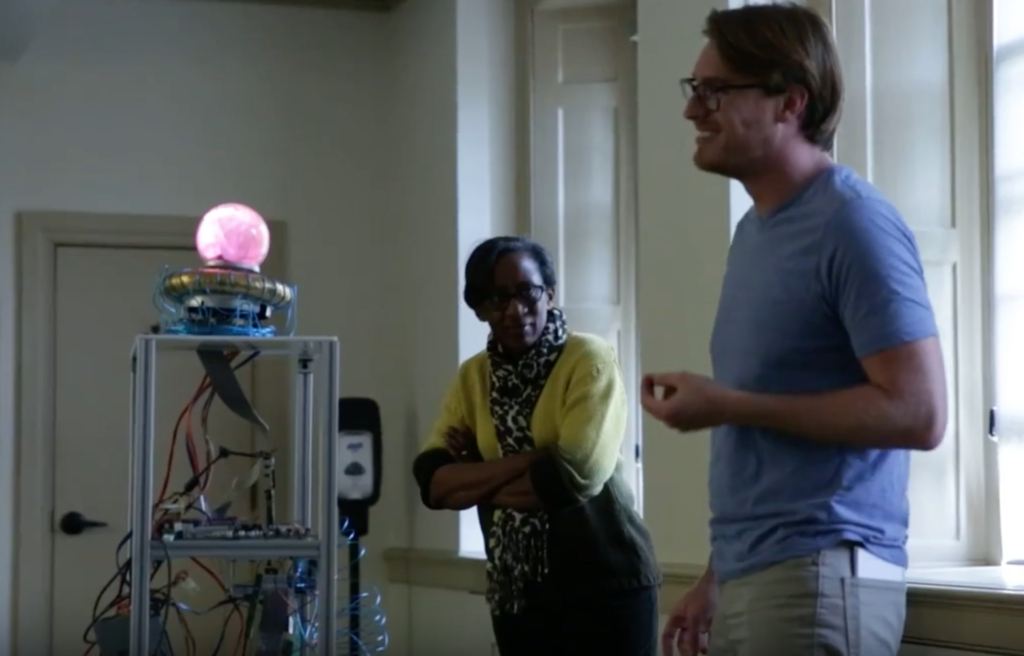

The final project that made it into the exhibition was just that, a robot with its wires crossed. The physical form of the robot looks haphazard and shoddily made. It’s clearly a prototype, complete with a color-changing plastic dome right out of Buck Rogers era sci-fi. The narrative snippets we made for the tour start out plausibly enough: the robot drives around the line, stops to look at the tour group and tells them some fascinating facts. As it goes on, it loses the line and needs to be wrangled by its handler to get back on track. Each time this happens the narrative drifts a little more. First it gets some facts believably wrong (kind of like Wikipedia), then it states obvious lies, then it just totally loses the plot. It was fun to make and fun to watch people figure out that they shouldn’t take robots so seriously. Not yet at least.

Hopefully I’ll be long gone before our new robot overlords happen upon this project.

Tangible Computing

My first experience with Tangible Computing came in Ken Perlin’s Physical Media class at NYU. We built a cool tabletop game called Krakatoa, a resource management game that used “pucks” marked with 1, 2, 3, or 4 dots. If I remember correctly, each of the four players would slide their puck over to a command menu, then slide the puck to a place on the board, and their character would go do whatever the command was. I think it was things like “cut wood” or “steal wood” or “burn wood”. As you can guess, wood was the primary resource for this game. I was still a beginning programmer at the time so I didn’t tackle any of the heavy stuff, but I did do all the audio recording and management, and I made the pucks, which were painted clay. They felt really nice in your hand when you slid them. Nowadays I would probably use a laser cutter to make them, but back then clay was best and I think it might still be the best.

Working in AR is very much like working on Tabletop Computing, but there is something about the mediation of a device that makes it less engaging. It also has a lot in common with physical computing, but the fact that inert objects are used instead of interactive ones changes things as well. So, when I got to Georgetown I was pretty excited that one of my colleagues knew about one of the old Surface (now PixelSense) tables that I could have. These are the big table kind, not the SUR40, and they were/are way better in a lot of ways. I remember Jeff Han working on the original design for these, again back at NYU, right before he gave his TED talk showing it off (that was my laptop he used for that talk btw, the closest I’ll probably ever get to the TED stage). Anyway, I decided to run a little experiment by teaching courses in both Tangible and Physical Computing in the Fall and Spring of my second year to see which one worked better. Turns out physical computing was way better, and that class morphed into my Interaction Design class. I still use the Surface on occasion. I had a student use it in the Pilgrimage Project and I made an app for the CCT lounge that introduces visitors to our faculty and staff.

The one major finding from that study was how totally useless the written tutorials were in both of those scenarios. Beyond useless actually, dangerous. It’s not that they were bad, they were great. The Arduino tutorials on their website are succinct and simple, and the ones that we made for the Tangibles class were terrific, all the students said so. The problem was that students had basically zero retention from the tutorials and were totally unable to connect them together or to see patterns. The tutorials also gave the false impression that the students could do things they simply were not sophisticated enough to do. Given how many tutorials there are and how much people rely on them in online courses and lots of other venues, I suspect this is going to be a real problem for online courses to overcome in the future.

Diego’s Birthday

My kids are not fans of Dora the Explorer. It’s a quality show, no doubt, but they just aren’t into it. I know a lot of kids are though. When I first arrived at Georgetown I met a Psychology professor named Sandra Calvert. She does a lot of work on the psychology of children’s media consumption, and we worked together on an NSF project to find out how children’s familiarity with a character can influence their learning. Presumably, engagement and learning are correlated, and having a pre-existing “parasocial relationship” with a character has been shown to increase learning in some circumstances. At the time my daughters were two and four and so I was personally interested in learning more about their learning (I try to be a good parent sometimes).

Having just arrived, I didn’t have a whole lot of resources at my disposal, but I managed to find a great student, Stevie Chancellor (already way more famous than I), and we cobbled together a game in XNA to test whether the characterization affects students’ ability to learn “subitization”, the ability to group numbers into collections instead of counting individually. The major finding here is that voice seems to matter more than character, or at least it’s a major part of the equation. Film and video producers are always fond of saying things like “audio is half of the presentation but gets about 10% of the attention” and it seems like when learning is concerned that’s also a big part of the story.

What I learned most from this was how truly desperate psychologists are for better experimental tools. When I was doing some of my more recent work on multiscale MR I kept coming across all of these psych studies that used VR platforms to test different mental abilities like navigation or spatial awareness. Not only were these extremely rudimentary, but they seemed to make all kinds of assumptions about how similar cognitive functions are between VR and the physical world. I’ve never seen anything that convinces me that putting someone at a desk with a VR helmet is a good analog for physical experience. The resolution is lower, the haptics are nonexistent, proprioception is confused, the list goes on. There are a few fMRI studies I’ve seen that attempt to bridge the gap by showing that spatial awareness in the physical world lights up the same areas as it does in the virtual one, but the results show similarity at best, and it’s the differences that really matter here if we’re trying to generalize from VR out to the physical world. It really comes down to the fact that we need much better and deeper collaboration between these disciplines. Happy to help if anybody is interested….

D5 Creation
